Ultramodality: The Next Step Beyond Multimodal Content.

For years, we’ve talked about multimodal content—the blend of text, images, and video. But as artificial intelligence continues its rapid evolution, a new concept is emerging: ultramodality. It’s a shift beyond simply combining different content formats. Ultramodality is about creating a dynamic, adaptive, and sensory-rich experience that personalizes itself in real time.

This isn’t just a futuristic buzzword; it’s the next frontier of digital interaction. As one expert put it, “If creativity had a pulse, AI just gave it a second heartbeat.” With ultramodality, that heartbeat is becoming more synchronized with each user’s unique needs and context.

This post will explore the world of ultramodal content, from how it differs from multimodality to its practical applications in SEO, user experience, and brand communication. We’ll look at how AI is driving this change and what it means for the future of content.

What’s the difference between ultramodality and multimodality?

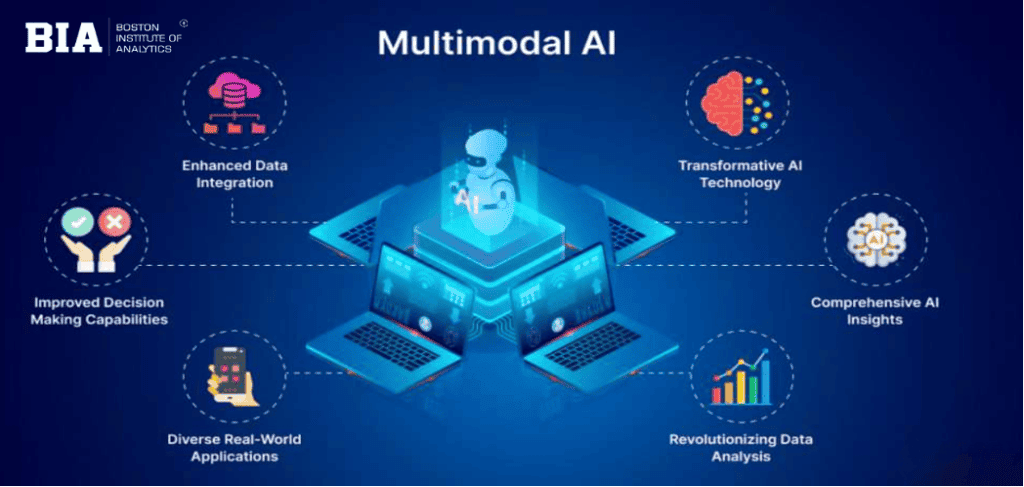

Multimodality is about including different types of media in your content. A blog post with images and an embedded YouTube video is a classic example. The experience is richer than text alone, but it stays static. Multimodal AI systems, by extension, are those that can process and understand various data inputs, such as text, images, and audio. As described in recent analyses, “multimodal AI can process multiple inputs at once: images, video, audio, and text.”

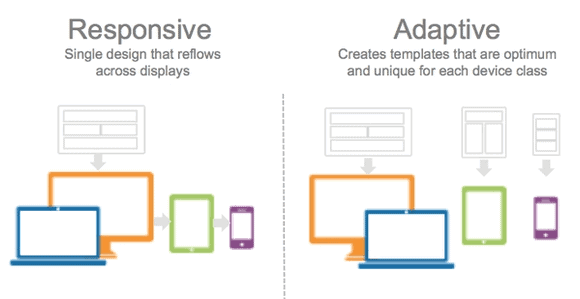

Ultramodality, on the other hand, takes this a step further. It’s not just about the presence of multiple formats; it’s about the dynamic interplay and adaptation of those formats. An ultramodal experience might change its presentation based on user behavior, device, location, or even biometric data. It’s a living, breathing form of content that responds to you.

Think of it as the difference between a curated playlist and a DJ who reads the room. Multimodal content is the fixed playlist; ultramodal content is the DJ, adjusting the music, tempo, and even the lighting based on the crowd’s energy. It’s a deeply personalized and immersive experience.

The concept of ultramodality is rooted in the rapid advancement of AI technologies. Modern multimodal AI systems, as described by industry experts, are “Systems that can see, hear, speak, and imagine—all at once.” These systems are already being deployed in various applications, from medical diagnostics that combine image scans with patient data to fraud detection tools that analyze voice patterns and behavioral data simultaneously. Ultramodality builds on this foundation by adding a layer of real-time responsiveness and personalization that transforms passive content consumption into an active, co-created experience.

Here’s a table to break down the key distinctions:

| Feature | Multimodality | Ultramodality |
| --- | --- | --- |
| Core Concept | Combining multiple content formats (text, image, video). | Dynamically adapting and integrating multiple content formats and sensory inputs. |
| User Experience | Static; the same for every user. | Dynamic and personalized; changes based on user interaction and context. |
| Interaction | Passive consumption of different media types. | Interactive and responsive; content adapts in real-time. |
| Technology | Standard web technologies (HTML, CSS, embedded media). | AI, machine learning, real-time data processing, sensory feedback devices. |
| Example | A blog post with images and an embedded video. | A website that changes its layout, text complexity, and media based on a user’s reading speed and engagement, potentially including haptic feedback on a mobile device. |

How to combine text, image, audio, and sensory data.

The magic of ultramodality lies in its ability to weave together diverse data streams into a single, cohesive experience. This goes beyond simply placing a video next to a paragraph of text. It involves a deeper integration, where each element is aware of and responsive to the others, all orchestrated by AI.

The Role of AI in Orchestration.

At the heart of an ultramodal system is an AI that acts as a conductor. It processes a constant flow of inputs—user interactions, environmental data, and even biometric feedback—to make real-time decisions about what content to present and how. This is where the concept of “adaptive content” becomes critical. As one report on real-time content adaptation notes, this approach can lead to a 35% increase in session durations and a 22% rise in conversion rates.
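To make the conductor idea more concrete, here is a minimal rule-based sketch in Python. The signal names (`words_per_minute`, `device`, `local_hour`), the thresholds, and the `Presentation` fields are all illustrative assumptions rather than any real product’s API. A production system would likely replace these hand-written rules with a learned model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical signals an orchestrator might receive."""
    words_per_minute: float  # estimated reading speed
    device: str              # "mobile" or "desktop"
    local_hour: int          # 0-23, the user's local time


@dataclass(frozen=True)
class Presentation:
    """Hypothetical presentation decisions."""
    text_level: str  # "simple" or "detailed"
    layout: str      # "single-column" or "multi-column"
    theme: str       # "dark" or "light"
    haptics: bool    # whether to request haptic feedback


def orchestrate(ctx: Context) -> Presentation:
    # Assumed threshold: slower readers get simplified text.
    text_level = "simple" if ctx.words_per_minute < 150 else "detailed"
    layout = "single-column" if ctx.device == "mobile" else "multi-column"
    # Assumed rule: dark theme in the evening and at night.
    theme = "dark" if ctx.local_hour >= 20 or ctx.local_hour < 6 else "light"
    # Haptics are only requested where the hardware can vibrate.
    haptics = ctx.device == "mobile"
    return Presentation(text_level, layout, theme, haptics)


if __name__ == "__main__":
    print(orchestrate(Context(words_per_minute=120, device="mobile", local_hour=22)))
```

Keeping the decision in one pure function makes it easy to test each rule in isolation and to swap the rules for a model later.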

Integrating Sensory Data.

The most groundbreaking aspect of ultramodality is its potential to incorporate sensory data, moving beyond the screen to create truly immersive experiences. This includes:

- Haptic Feedback: Mobile devices can provide subtle vibrations or textures that correspond to on-screen actions, enhancing user engagement. Research has shown that combining haptic and visual feedback can create a greater sense of presence than either modality alone.

- Ambient Sound: An ultramodal website could adjust its background audio based on the user’s perceived mood or the time of day, creating a more calming or energizing environment.

- Scent and Taste: While still in their infancy, technologies are being developed to introduce smells and even tastes into digital experiences, opening up new possibilities for food, travel, and retail brands.

This multi-sensory approach is not just a gimmick; it’s a powerful way to create deeper emotional connections with users. As one article on the future of digital experiences puts it, we are moving towards an “Internet of Senses,” where we can “experience smell, taste, textures and temperature digitally.”
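As a rough illustration of the haptic and ambient-sound ideas above, sensory cues can be expressed as plain data that a front end then plays back. In the sketch below, the event names, the vibration patterns (alternating vibrate and pause durations in milliseconds), the hour boundaries, and the track names are all hypothetical choices.

```python
# Hypothetical mapping from UI events to vibration patterns.
# Each pattern alternates vibrate/pause durations in milliseconds.
HAPTIC_PATTERNS = {
    "button_press": [20],
    "item_added": [30, 50, 30],
    "error": [100, 40, 100, 40, 100],
}


def haptic_pattern(event: str) -> list[int]:
    """Return a copy of the vibration pattern for an event, or no vibration."""
    return list(HAPTIC_PATTERNS.get(event, []))


def ambient_track(local_hour: int) -> str:
    """Pick a (hypothetical) background track from the local hour, 0-23."""
    if not 0 <= local_hour <= 23:
        raise ValueError("local_hour must be between 0 and 23")
    if 6 <= local_hour < 12:
        return "morning-energizing"
    if 12 <= local_hour < 19:
        return "daytime-neutral"
    return "evening-calm"
```

Scent and taste are left out here because there is no comparably simple, widely available output channel to model them on.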

Building the Technical Infrastructure.

Creating ultramodal experiences requires a sophisticated technical infrastructure. At the core, you need several key components working in harmony. The first is a dynamic content management system that can serve different versions of content based on user attributes and behavior.

The second is an AI and machine learning platform capable of processing data in real time and making intelligent decisions about content presentation. The third is a set of analytics tools that can track user interactions across multiple touchpoints and feed that data back into the system.
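The way these three components feed each other can be sketched in a few lines of Python. The class names `VariantStore` and `Analytics` and the function `choose_variant` are hypothetical stand-ins for a CMS, an analytics tool, and a decision layer. The selection logic is a deliberately simple greedy rule, not a real machine learning platform.

```python
from collections import defaultdict


class VariantStore:
    """Stands in for a dynamic CMS holding several versions of one page."""

    def __init__(self, variants: dict[str, str]):
        self.variants = variants

    def get(self, name: str) -> str:
        return self.variants[name]


class Analytics:
    """Tracks how often each variant was shown and engaged with."""

    def __init__(self):
        self.shown = defaultdict(int)
        self.engaged = defaultdict(int)

    def record(self, variant: str, engaged: bool) -> None:
        self.shown[variant] += 1
        if engaged:
            self.engaged[variant] += 1

    def engagement_rate(self, variant: str) -> float:
        shown = self.shown[variant]
        return self.engaged[variant] / shown if shown else 0.0


def choose_variant(store: VariantStore, analytics: Analytics) -> str:
    """Decision step: show untried variants first, then the best performer."""
    for name in store.variants:
        if analytics.shown[name] == 0:
            return name
    return max(store.variants, key=analytics.engagement_rate)
```

Each call to `Analytics.record` changes what `choose_variant` returns next, which is the feedback loop in miniature.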

The good news is that these technologies are becoming more accessible. The adaptive AI market is experiencing explosive growth, with a compound annual growth rate of 42.6% projected from 2023 to 2030. This means that tools and platforms for creating ultramodal experiences are becoming more affordable and easier to implement, even for smaller organizations.

Cloud-based AI services from major providers have democratized access to machine learning capabilities that were once available only to tech giants.
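To put the 42.6% compound annual growth rate mentioned above in perspective, a quick calculation shows what it implies over the whole period. The sketch assumes that 2023 to 2030 counts as seven compounding years; under that assumption the market would end up roughly twelve times its starting size.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding annual_rate for `years` years."""
    return (1 + annual_rate) ** years


# Assumption: 2023 to 2030 is treated as seven compounding periods.
multiple = growth_multiple(0.426, 2030 - 2023)
print(f"Market size multiple: {multiple:.1f}x")
```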

Use cases in SEO and user experience.

Ultramodality is poised to revolutionize how we approach search engine optimization (SEO) and user experience (UX). By creating more personalized and engaging content, businesses can build stronger relationships with their audiences and achieve better visibility in a changing search landscape.

Real-time adaptation and AI video content.

One of the most significant shifts in recent years has been the rise of video as a primary source of information. Google’s AI Overviews are increasingly pulling content from platforms like YouTube and TikTok, treating video as a credible and authoritative source. As one industry analysis notes, “Google is redefining what counts as ‘credible’ source material.” This makes a strong case for a video-first approach to content strategy.

Ultramodal content takes this a step further by not just including video, but by making it adaptive. Imagine a tutorial video that adjusts its pacing based on the viewer’s engagement. If the system detects that a user is re-watching a particular section, it could automatically offer a more detailed explanation or a different camera angle. This level of personalization creates a stickier, more effective user experience.
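The re-watch detection described here can be approximated with very little logic. The following sketch assumes the player logs the timestamp at which each playback run starts. The 30-second segment length, the threshold of two plays, and the wording of the prompt are hypothetical choices.

```python
from collections import Counter


def rewatched_segments(
    watch_positions: list[float],
    segment_length: float = 30.0,
    threshold: int = 2,
) -> list[int]:
    """Return indexes of segments the viewer started at least `threshold` times.

    `watch_positions` holds the video timestamp (in seconds) at which each
    playback run started, for example after a seek or a replay click.
    """
    counts = Counter(int(pos // segment_length) for pos in watch_positions)
    return sorted(seg for seg, n in counts.items() if n >= threshold)


def suggest_help(watch_positions: list[float]) -> list[str]:
    """Turn rewatched segments into (hypothetical) follow-up prompts."""
    return [
        f"Offer a detailed explanation for segment {seg}"
        for seg in rewatched_segments(watch_positions)
    ]
```

For example, a viewer who starts playback at 0, 65, 70, 62, and 130 seconds has returned to segment 2 (the 60 to 90 second window) three times, so only that segment triggers a prompt.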

This adaptive approach also has significant SEO benefits. By keeping users engaged for longer, you send strong signals to search engines that your content is valuable. The focus shifts from pure keyword optimization to experience optimization. As the digital landscape evolves, search visibility will depend less on traditional ranking factors and more on the quality of the user experience.

The numbers tell a compelling story. Websites implementing real-time content adaptation have seen a 40% reduction in cost per qualified lead and a 25% increase in lead quality scores. These are not marginal improvements; they represent a fundamental shift in how content performs. When your content adapts to meet users where they are, both literally and figuratively, the results speak for themselves.

Moreover, the rise of AI-powered search is changing the rules of the game. Because AI Overviews now draw on a diverse array of sources, including social media platforms like TikTok and Instagram Reels and not just traditional websites, brands need to think beyond their own properties and create content that can live and adapt across multiple platforms. The goal is not just to rank for keywords, but to become a trusted source that AI systems cite when answering user queries.

The future of brand communication formats.

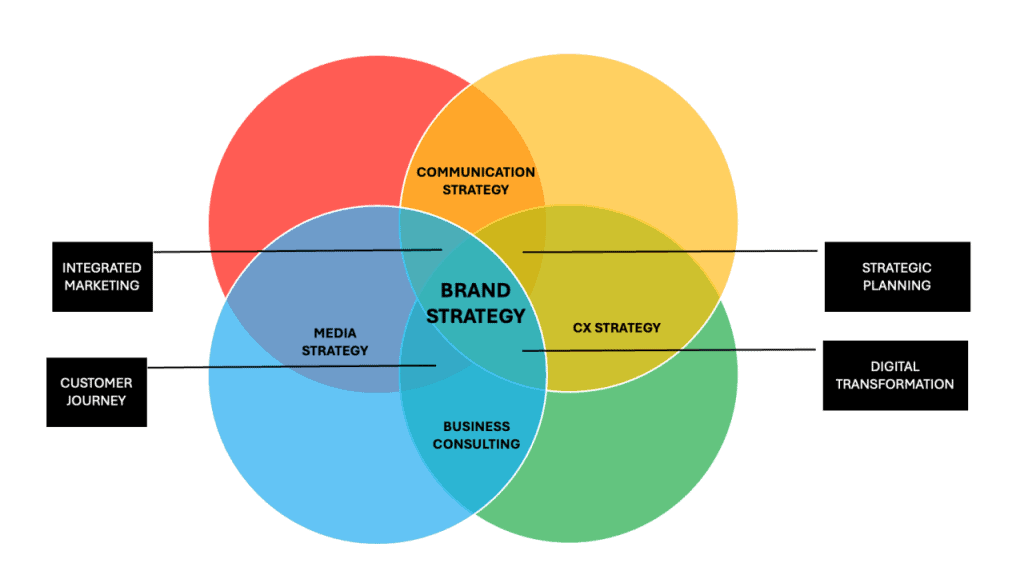

For brands, ultramodality opens up a world of new communication possibilities. It’s a chance to move beyond one-size-fits-all messaging and create deeply resonant experiences. The future of brand communication is not about shouting the loudest; it’s about listening the closest and responding in the most relevant way.

An ultramodal brand experience could look something like this: a potential customer visits a product page, and the content adapts in real time. The technical specifications might be presented in a simplified format for a novice user, while a more experienced user might see detailed charts and data.

The product images could change to reflect the user’s location or even the time of day. This is the essence of adaptive content—delivering the right information, in the right format, at the right time.

This level of personalization builds trust and fosters a sense of connection. It shows that the brand understands and respects the user’s individual needs. In a world saturated with generic marketing messages, this kind of tailored communication is a powerful differentiator. It’s a move from broadcasting to conversation, and it’s the future of building lasting brand loyalty.

Consider the implications for different industries. In e-commerce, an ultramodal product page could adjust its emphasis based on what matters most to each shopper—sustainability credentials for environmentally conscious consumers, technical specifications for tech enthusiasts, or social proof and reviews for those who value peer recommendations. In education, learning materials could adapt their complexity and pacing based on a student’s comprehension level, providing additional support where needed or accelerating through material already mastered.
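The e-commerce case can be reduced to a small ranking problem: score each page section against what a shopper appears to care about, then lead with the best match. In this sketch the section names, tags, and interest weights are invented for illustration. How the interest weights would be estimated is outside its scope.

```python
def rank_sections(
    sections: dict[str, set[str]],
    interests: dict[str, float],
) -> list[str]:
    """Order page sections by how well their tags match a shopper's interests.

    `sections` maps a section name to its tags; `interests` maps a tag to a
    weight between 0 and 1. Both are hypothetical inputs.
    """
    def score(name: str) -> float:
        return sum(interests.get(tag, 0.0) for tag in sections[name])

    # sorted() is stable, so tied sections keep the page's default order.
    return sorted(sections, key=score, reverse=True)


# Default section order for a hypothetical product page.
PRODUCT_PAGE = {
    "specs": {"technical"},
    "sustainability": {"environment"},
    "reviews": {"social_proof"},
}

# An environmentally conscious shopper who also glances at reviews.
eco_order = rank_sections(PRODUCT_PAGE, {"environment": 0.9, "social_proof": 0.4})
```

With no interest data at all, the function falls back to the default order, so a first-time visitor still sees a sensible page.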

The autonomous AI market, which includes many of the technologies that power ultramodal experiences, is expected to reach $62 billion by 2026. This massive investment reflects the belief that adaptive, intelligent systems will become the standard rather than the exception. Brands that embrace this shift early will have a significant competitive advantage, while those that cling to static, one-size-fits-all content risk being left behind.

The Dawning of the Ultramodal Era.

The transition to ultramodality is not a distant dream; it’s a gradual evolution that is already underway. The building blocks (AI, machine learning, and advanced content management systems) are becoming more accessible and powerful, and the adaptive AI market’s projected 42.6% compound annual growth rate from 2023 to 2030 is a clear indicator of how quickly these technologies are being adopted.

This new era of content is not just about technology; it’s about a fundamental shift in how we think about communication. It’s a move away from the static, one-way broadcast model of traditional media towards a more dynamic, interactive, and conversational paradigm. The lines between content creator and content consumer are blurring, as users become active participants in shaping their own digital experiences.

The Future of Content Experience.

Looking ahead to 2026 and beyond, the content experience will be almost unrecognizable compared with what we know today. We can expect several key trends to emerge:

- Hyper-Personalization: Content will be tailored to an unprecedented degree, taking into account not just demographics and browsing history, but also real-time emotional and cognitive states. Imagine a news article that adjusts its language and tone to be more empathetic if it detects that the reader is feeling stressed.

- Immersive Storytelling: The boundaries between the digital and physical worlds will continue to dissolve. Augmented reality (AR) and virtual reality (VR) will become more mainstream, allowing for truly immersive storytelling experiences. A history lesson could transport students to ancient Rome, while a product demonstration could allow customers to virtually hold and interact with a new device.

- Proactive Assistance: AI-powered agents will anticipate our needs and provide us with relevant information and content before we even think to ask for it. Your smart home might display a recipe on the kitchen counter as you start to prepare dinner, or your car might play a relaxing playlist after a stressful day at work.

This future is not without its challenges. There are significant ethical considerations around data privacy and the potential for manipulation. As we create more intimate and personalized digital experiences, it’s crucial that we do so in a way that is transparent, respectful, and empowering for users.

Preparing for the Ultramodal Web.

For content creators, marketers, and developers, the shift to ultramodality presents both a challenge and an opportunity. It requires a new set of skills and a new way of thinking, but it also opens up exciting possibilities for creating more engaging and effective content. Here are a few steps you can take to prepare for the ultramodal web:

- Embrace a Data-Driven Mindset: Ultramodality is built on a foundation of data. Start by collecting and analyzing data about your audience to understand their needs, preferences, and behaviors. This will be the fuel for your personalization efforts.

- Think in Ecosystems, Not Silos: Break down the traditional silos between different content formats and channels. Think about how your content can work together as a cohesive ecosystem, with each element reinforcing and enhancing the others.

- Invest in the Right Technology: To deliver truly ultramodal experiences, you’ll need the right tools. This includes a flexible and powerful content management system, as well as AI and machine learning platforms that can handle real-time data processing and personalization.

- Experiment and Iterate: The world of ultramodality is still new and evolving. Don’t be afraid to experiment with different approaches and to learn from your successes and failures. The key is to be agile and to constantly iterate on your content and your strategy.

Conclusion: The Next Chapter in Digital Communication.

Ultramodality represents a paradigm shift in how we create and consume digital content. It’s a move away from the static, one-size-fits-all approach of the past and towards a future that is more dynamic, personalized, and immersive. While the road ahead is still being paved, the direction is clear. The future of content is not just about what we say, but how we say it—and, more importantly, how our audience experiences it.

By embracing the principles of ultramodality, we can create more meaningful and impactful connections with our audiences and unlock the full potential of digital communication. The journey is just beginning, but one thing is certain: the content experience of 2026 will be a world away from the one we know today.