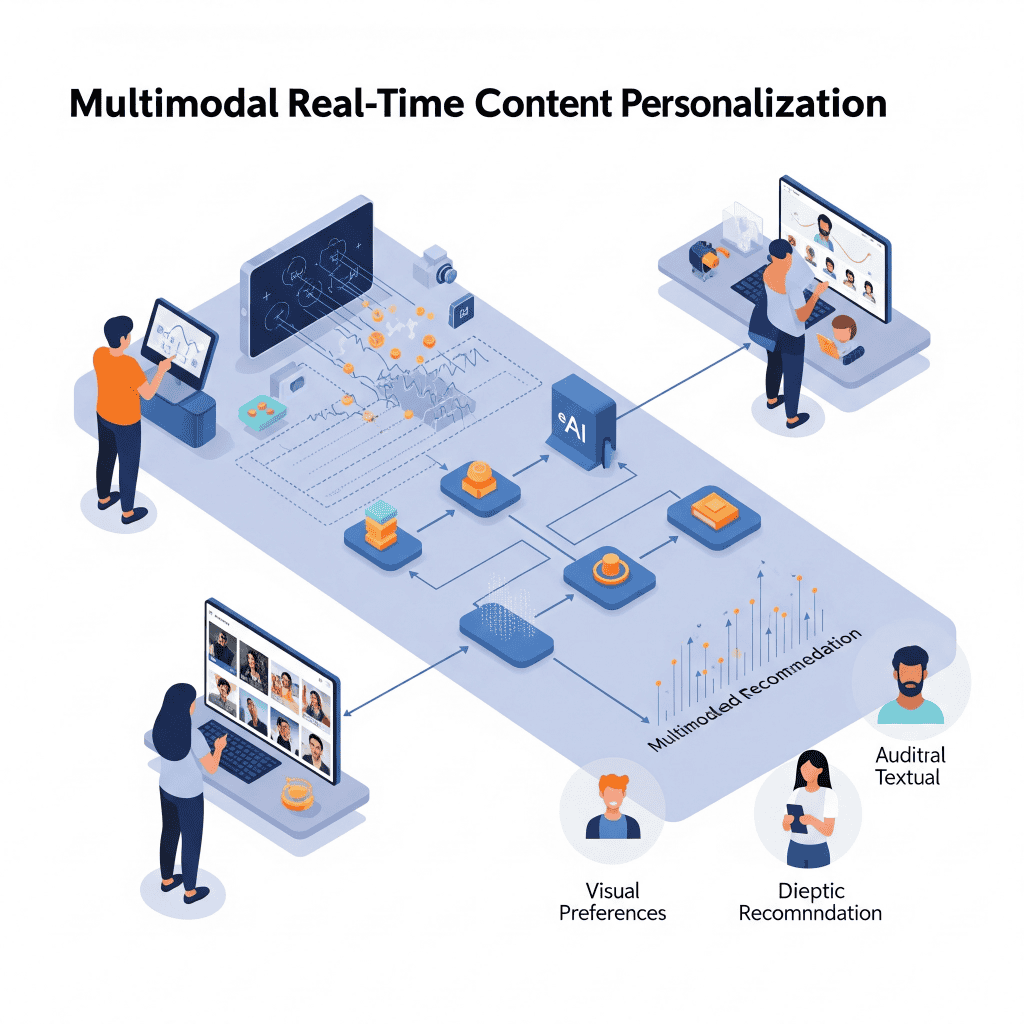

Multimodal Real-Time Content Personalization.

Just a few years ago, content personalization meant, at most, a name in an email subject line or “recommended products” on a store’s website. Today, with the development of multimodal artificial intelligence, we are experiencing a completely new era.

One where systems analyze not only what we click, but how we speak, how we look, how we move across the screen – and based on that, they instantly adapt what they show us. This isn’t science fiction. This is a reality that marketing and technology leaders are beginning to shape.

Welcome to the world of multimodal real-time content personalization – the future that’s happening right now.

From Classic Personalization to AI That Understands Context.

For decades, content personalization rested mainly on a narrow set of behavioral and account data: purchase history, products viewed, or IP-based location. Marketers created personas, segmented users, and tailored content to groups, not individuals. With the rise of AI content personalization, we began to go a step further – systems learned the preferences of specific users. But it was only the emergence of multimodal AI that revolutionized this field.

🔀 What is Multimodality?

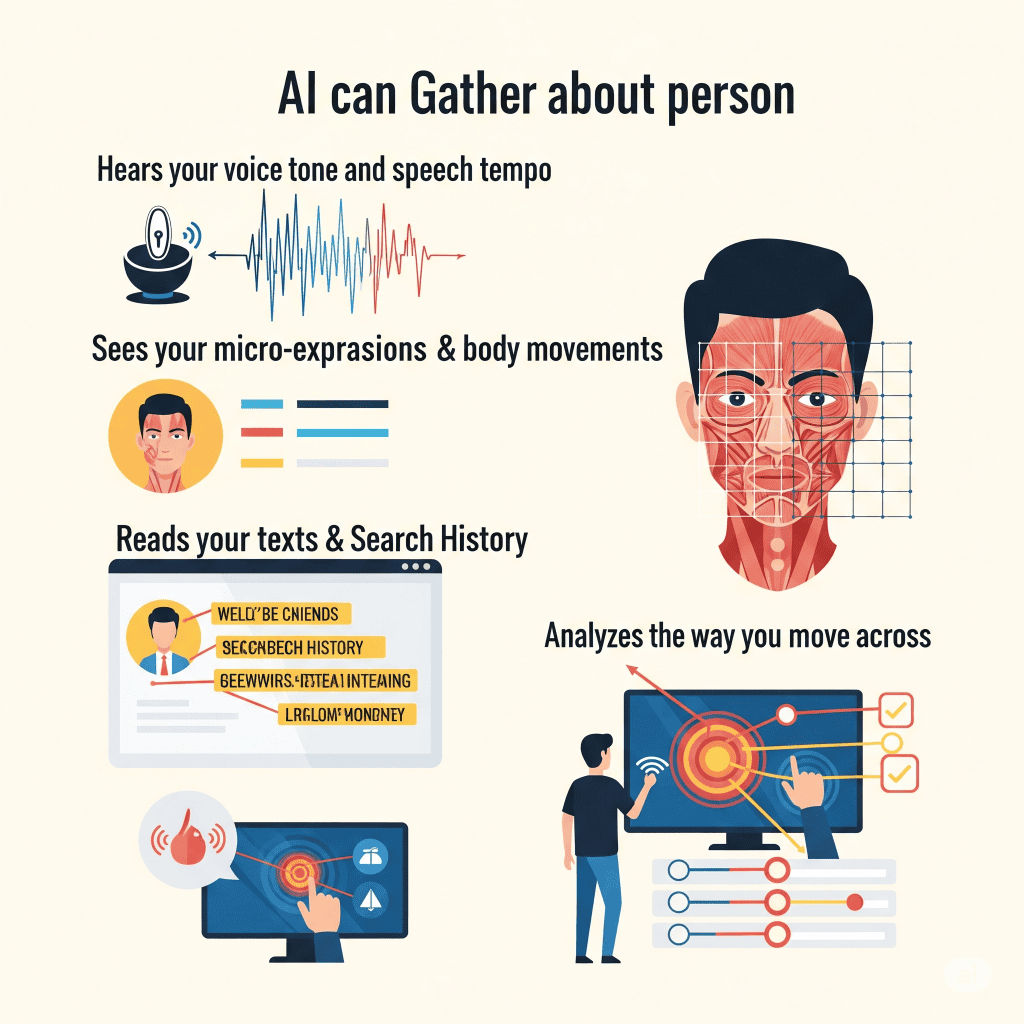

It’s the ability of a system to analyze different types of data together – text, image, sound, gestures, emotional signals. This is how the most advanced models work today, such as GPT-4o, Gemini, or Claude. Depending on the model and the inputs it accepts, they can “listen” to the user’s voice, interpret facial expressions in an image, and analyze the text of a query within the same interaction. Often in near real time.

End of the “One Size Fits All” Era.

Just five years ago, personalization at most meant “Hi, Sarah!” in an email subject line and “Customers who bought this product also viewed…”. Marketers divided users into broad demographic categories and created content for entire groups, not individuals.

This era is definitively ending. Today, we are entering a world where artificial intelligence not only reads our clicks but feels our emotions, sees our reactions, and hears our tone of voice. And based on that – in a fraction of a second – it adjusts everything: content, communication tone, format, and even timing. This is not science fiction. This is a reality already being shaped by the world’s biggest tech brands.

The Revolution No One Is Talking About Out Loud.

Multimodal AI is a breakthrough that is quietly revolutionizing how technology understands us.

Imagine a system that simultaneously:

Combines all this data into a coherent picture of your current state.

It’s like the difference between a black and white photograph and a 4K movie with surround sound. Suddenly the system has a complete picture – not just what you’re doing, but how you’re feeling and what you really need.

Technology That Has Senses.

Behind the scenes of this revolution are next-generation AI models – GPT-4o, Gemini 1.5, Claude Sonnet – which can process several kinds of input together: text, images, in some cases sound, and the surrounding context. It’s like giving a computer human senses.

But the real magic happens in four technological layers:

Layer 1. Multimodal data collection.

The system constantly “listens” – analyzing cursor movements, time spent in sections, voice tone in recordings, facial expressions (if you give consent), and even data from IoT devices like smartwatches or smart lighting.

Layer 2. Interpretation of emotions and context.

AI understands that “sigh + furrowed brows + long pause” are signs of frustration. That fast scrolling means haste or lack of interest. That evening logins have different intentions than morning ones.

Layer 3. Instant decision-making.

Within milliseconds, the system decides what content to show, what tone to communicate in, and whether to offer help or give space.

Layer 4. Continuous optimization.

Every user reaction – click, scroll, response time – returns to the system as feedback. AI learns in real-time what works and what doesn’t.
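To make the four layers more concrete, here is a minimal Python sketch of how they could fit together. Everything in it is an assumption for illustration: the `Signals` fields, the thresholds, the state names, and the variant names are hypothetical, and the "learning" is a simple greedy pick by observed click rate, not the method of any product mentioned in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Signals:
    """Layer 1: one snapshot of collected signals (hypothetical fields)."""
    scroll_px_per_s: float        # average scrolling speed
    pause_s: float                # longest pause before the last action
    sighed: bool = False          # from audio analysis, only with consent
    brows_furrowed: bool = False  # from camera analysis, only with consent


def interpret(s: Signals) -> str:
    """Layer 2: map raw signals to a coarse user state (assumed thresholds)."""
    if s.sighed and s.brows_furrowed and s.pause_s > 5.0:
        return "frustrated"
    if s.scroll_px_per_s > 1500:
        return "hurried"
    return "neutral"


@dataclass
class Personalizer:
    """Layers 3 and 4: choose a content variant, then learn from feedback."""
    variants: dict = field(default_factory=lambda: {
        "frustrated": ["simplified_ui", "offer_help"],
        "hurried": ["top_picks", "short_summary"],
        "neutral": ["default", "detailed"],
    })
    shown: dict = field(default_factory=dict)   # (state, variant) -> times shown
    clicks: dict = field(default_factory=dict)  # (state, variant) -> times clicked

    def decide(self, state: str) -> str:
        """Layer 3: pick the variant with the best click rate, untried ones first."""
        def rate(variant: str) -> float:
            n = self.shown.get((state, variant), 0)
            if n == 0:
                return float("inf")  # try every variant at least once
            return self.clicks.get((state, variant), 0) / n
        return max(self.variants[state], key=rate)

    def feedback(self, state: str, variant: str, clicked: bool) -> None:
        """Layer 4: record the user's reaction so later decisions improve."""
        key = (state, variant)
        self.shown[key] = self.shown.get(key, 0) + 1
        self.clicks[key] = self.clicks.get(key, 0) + int(clicked)
```

In use, each page view would run `interpret(...)`, call `decide(state)` to choose what to render, and later report the outcome through `feedback(...)`. A production system would likely replace the fixed rules with trained models and the greedy pick with a proper exploration strategy.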

Where Is It Already Working?

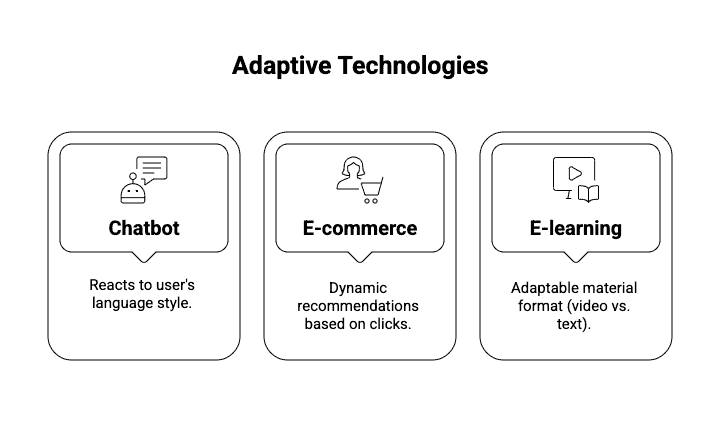

🛒 E-commerce: When the store reads your mood.

🛍️ Amazon is already testing systems that analyze customers’ micro-expressions through cameras while browsing products. If the system detects frustration on a face, it immediately simplifies the interface, shows fewer options, and offers chatbot assistance. When it notices enthusiasm, it builds on that mood by showing premium and limited edition products.

🧭 Alibaba has gone even further – their AI analyzes how customers scroll through products. Fast scrolling means haste – the system shows the most popular options at the top. Slow, careful scrolling signals that the customer has time to explore – then the AI presents more details and alternatives.
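The scroll-speed idea is simple enough to sketch in a few lines of Python. This is only an illustration under stated assumptions: the event format (timestamp in seconds, vertical scroll position in pixels), the 1200 px/s threshold, and the mode names `popular_first` and `detailed` are all hypothetical and say nothing about how any retailer actually implements it.

```python
def scroll_speed(events):
    """Average scroll speed in px/s.

    `events` is a time-ordered list of (timestamp_s, scroll_y_px) tuples,
    as a front end might report them (assumed format).
    """
    if len(events) < 2:
        return 0.0
    # Total distance travelled, counting scrolling up as well as down.
    distance = sum(abs(y2 - y1) for (_, y1), (_, y2) in zip(events, events[1:]))
    elapsed = events[-1][0] - events[0][0]
    return distance / elapsed if elapsed > 0 else 0.0


def listing_mode(events, fast_px_per_s=1200.0):
    """Fast scrolling: lead with popular items. Slow scrolling: show more detail."""
    if scroll_speed(events) >= fast_px_per_s:
        return "popular_first"
    return "detailed"


hurried = [(0.0, 0), (0.5, 900), (1.0, 1800)]
browsing = [(0.0, 0), (2.0, 300), (4.0, 500)]
print(listing_mode(hurried), listing_mode(browsing))
```

The threshold is the part that needs real data: a sensible value depends on device, page length, and audience, so it is better treated as something to measure than as a constant.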

🎓 EdTech. Learning that adapts to you.

Khan Academy, powered by GPT-4, is revolutionizing online education. The system analyzes not only the correctness of answers but also reading speed, length of pauses before answering, and even voice tone during recorded answers.

When AI detects that a student is struggling (long pauses, monotonous voice), it automatically suggests a break or changes the material format from text to interactive video. When it notices excitement (fast, confident answers), it increases the difficulty level and proposes additional challenges.

💼 HR and recruitment. Conversations that feel emotions.

Modern recruitment systems analyze not only candidates’ words but also voice tone, speech tempo, and micro-expressions. If AI detects stress or uncertainty, it can suggest to the recruiter to change the topic or tone of the conversation.

When it notices enthusiasm, it can suggest going deeper into a topic the candidate seems passionate about. Companies like Unilever are already using such systems for initial candidate screening, but not for elimination – rather for better matching questions and conversation style to each candidate’s personality.

🤖 Customer Support. Bots with empathy.

The latest chatbots analyze not only the content of inquiries but also writing style, emojis used, and message length. Short, abrupt sentences are often a sign of frustration – the bot switches to a more empathetic mode, offers immediate help, and shortens replies. Long, detailed problem descriptions signal that the client has time and needs precise instructions.
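The writing-style signal can be approximated without any AI model at all, which makes it a reasonable first experiment. The Python sketch below looks only at the form of a message (word count and average sentence length); the cut-offs, the style labels, and the fields of the reply plan are hypothetical choices for illustration, and emoji handling is left out.

```python
import re


def message_style(text: str) -> str:
    """Classify a support message as 'terse', 'detailed', or 'neutral' by form alone."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = text.split()
    if not sentences:
        return "neutral"
    avg_sentence_len = len(words) / len(sentences)
    # Short message made of very short sentences: a possible sign of frustration.
    if len(words) <= 12 and avg_sentence_len <= 4:
        return "terse"
    # Long description: the customer is likely willing to read precise steps.
    if len(words) >= 40:
        return "detailed"
    return "neutral"


def reply_plan(text: str) -> dict:
    """Turn the detected style into settings a bot could apply to its reply."""
    plans = {
        "terse": {"tone": "empathetic", "max_sentences": 2, "offer_human": True},
        "detailed": {"tone": "precise", "max_sentences": 8, "offer_human": False},
        "neutral": {"tone": "friendly", "max_sentences": 4, "offer_human": False},
    }
    return plans[message_style(text)]


print(reply_plan("Not working. Again. Fix it."))
```

A heuristic like this will misread some messages (a short "Thanks!" is not frustration), so in practice it would be one input among several, ideally checked against real conversations before it changes what customers see.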

Why This Will Change Your Business – Regardless of Industry.

Multimodal personalization is not just a gadget for tech giants. It’s a fundamental shift in how companies build relationships with customers. Here’s why you should be interested:

1️⃣ Increased engagement through true understanding.

When a customer feels understood on an emotional level, their engagement rises sharply. Research shows that personalized experiences can increase engagement by as much as 300%. But multimodal personalization goes a step further – it not only shows the right content but does so at the right time, in the right tone.

2️⃣ Conversions that grow on their own.

Real-time personalization reduces friction in the customer journey. Instead of forcing everyone to follow the same path, the system creates a unique path for each user. The result? A 15-25% increase in conversions is standard, but some companies report improvements of up to 50%.

3️⃣ Reduced frustration and abandonment.

How many times have you abandoned an app or website because it was too complicated, slow, or simply didn’t understand what you needed? Multimodal systems detect signs of frustration before the customer disconnects and immediately adjust the experience. This could mean simplifying the interface, speeding up the process, or offering help.

4️⃣ Competitive advantage that matters.

In a world where everyone has access to similar products and services, customer experience becomes the main differentiator. Companies that master multimodal personalization first will build a lead that competitors will find difficult to close.

Is This Really Possible Now?

Technologically – yes. Models like GPT-4o or Gemini 1.5 already support text, image, sound, and context analysis today. Frameworks for adaptive personalization (e.g., Contentful + AI, Adobe Sensei) are available.

Limitations? Implementation cost – full solutions are currently available mainly to larger companies. Required data and system integration – it’s not plug & play. Ethical and privacy challenges – collecting multimodal data must be transparent and 100% compliant with regulations.

But an MVP? You can build one today, for example:

Your First Step into the Future.

Multimodal real-time content personalization is not a trend that will pass. It’s a fundamental change in how technology understands people. Companies that understand and implement this first will build a competitive advantage for years to come.

You don’t have to start with a full multimodal system. Start by analyzing how your customers behave. What signals do they send? What frustrates them the most? Where do you lose their attention? Then invest in simple behavior analysis tools – heatmaps, session recordings, sentiment analysis in comments.

This is the first step towards understanding your customers on a deeper level. And when you’re ready for more, multimodal AI will be waiting with open arms – ready to transform your business in ways you can only dream of today.

The future of personalization is not about showing more ads. It’s about being present at the right time, in the right style, with the right content. So that your brand is not only seen but felt. The question is not “if,” but “when” you will begin this journey.