The ultimate content intelligence platform for SEO & SGE.

Build powerful domain expertise

with intelligently planned & written content

Content strategy planner & advanced content editor with semantic models (NLP), Google SERP analysis, and competition data.

CONTADU helps you to plan, write and optimize content with user intent in mind!

Over 150 000 users & 2 000 000 content analyses

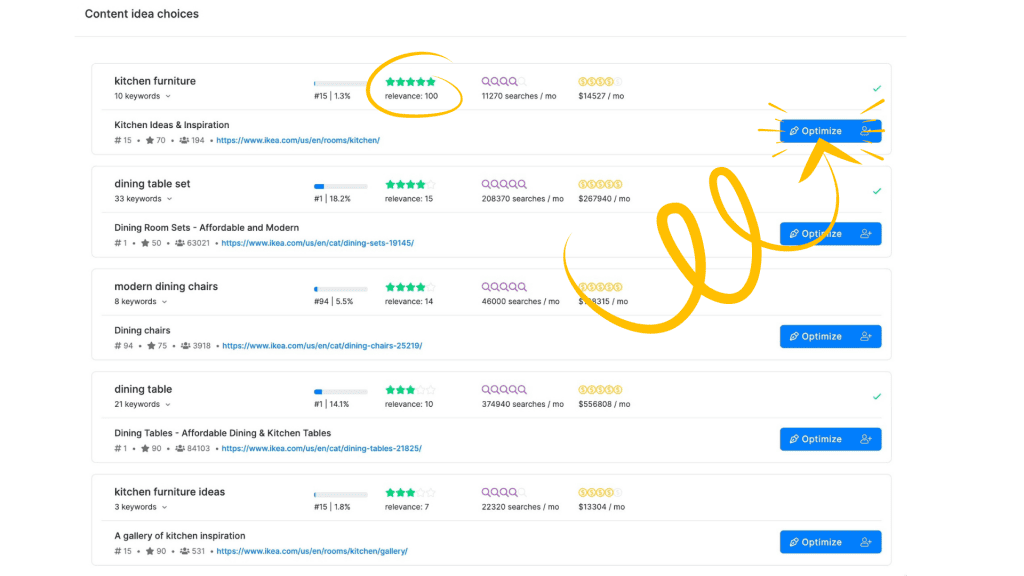

🏆 Topical Authority Planner

Work from an automated task list built from semantic keyword clusters, with a coverage assessment and priorities grouped by content type.

👉 Act with confidence: know precisely which content to prioritize and schedule execution.

👉 Know your competitive position: check how well you and your competitors cover each cluster with content.

👉 Be effective & organized: easily schedule your work for the coming weeks, assign tasks, set deadlines, and work more efficiently than ever before.

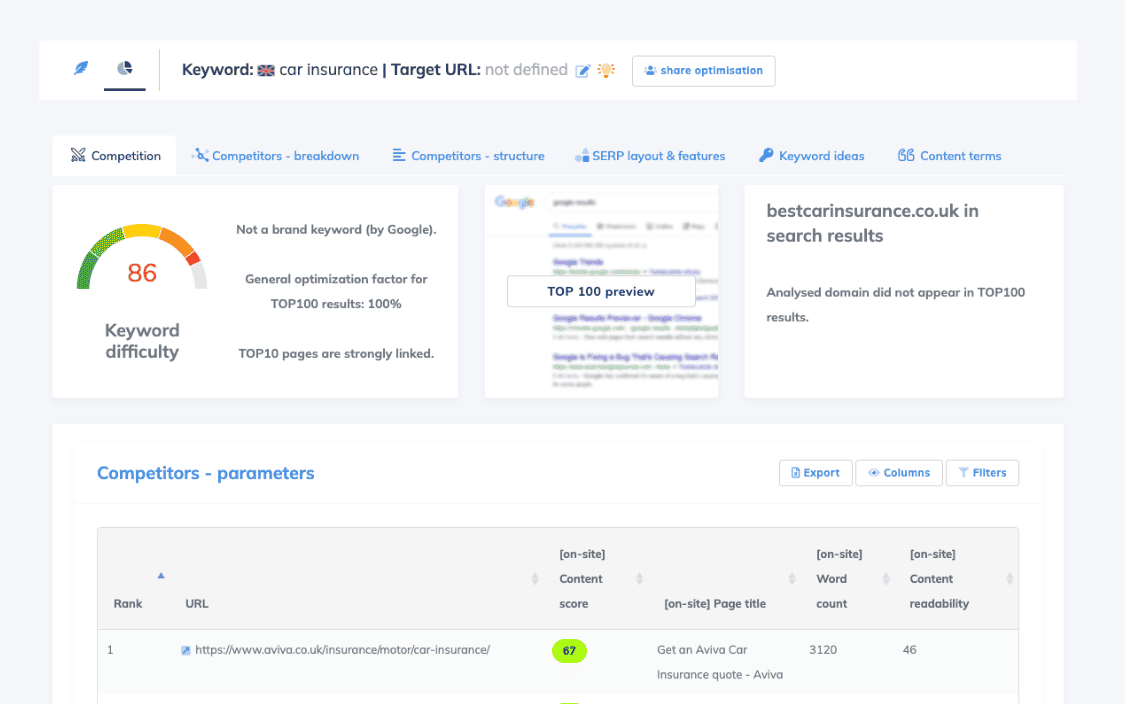

⚗️ Competitive Landscape

Make data-driven decisions, optimize your strategies, and stay ahead of the competition.

👉 Understand search intent: better grasp what users are truly looking for. Optimize or create new content to address that intent and improve search ranking.

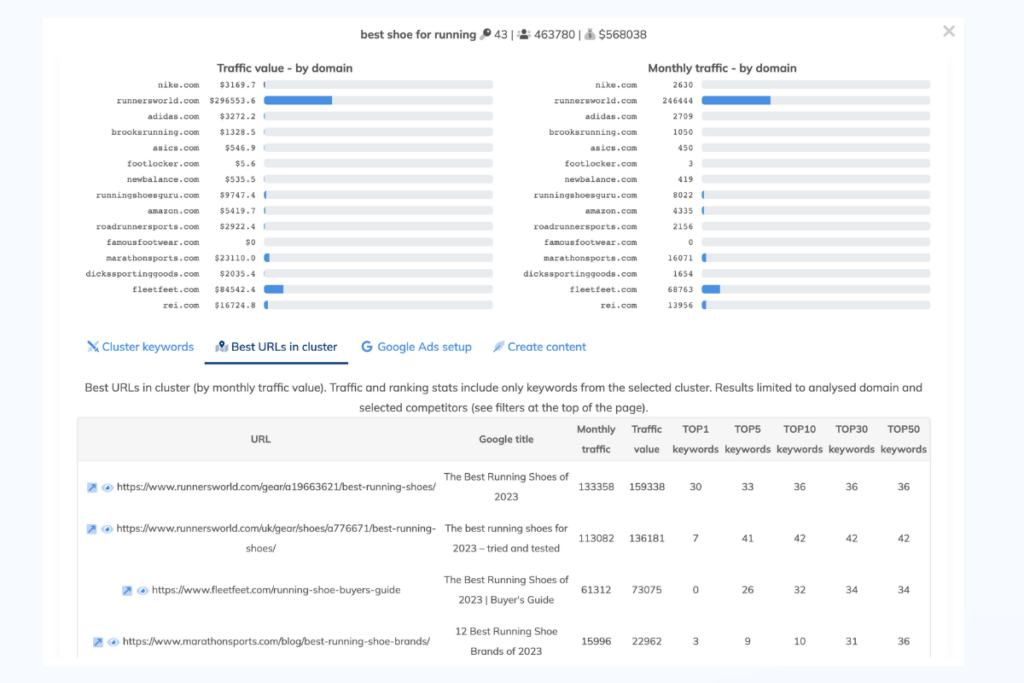

👉 Increase your market share: analyze the topic clusters, user intent and top pages of successful competitors. Understand their best practices, improve on them and easily create the best content.

👉 Identify content gaps: Know what kind of content your competitors are ranking for. Identify potential content gaps, cover them effectively, and build your topic expertise.

🚀 Content Optimisation

Effortlessly increase your site’s visibility, traffic, user experience and competitiveness by optimizing your content with:

👉 Auto-optimization & auto-alts: work smarter, supplement your work with automated options and create great content faster.

👉 Internal linking suggestions: easily improve your website structure, navigation and overall SEO performance.

👉 Real-time content scoring: receive immediate feedback and make corrections to content as you write.

Content Strategy - AI-based semantic expertise

Topical authority with long-term content planning

Understand competitors' strategy

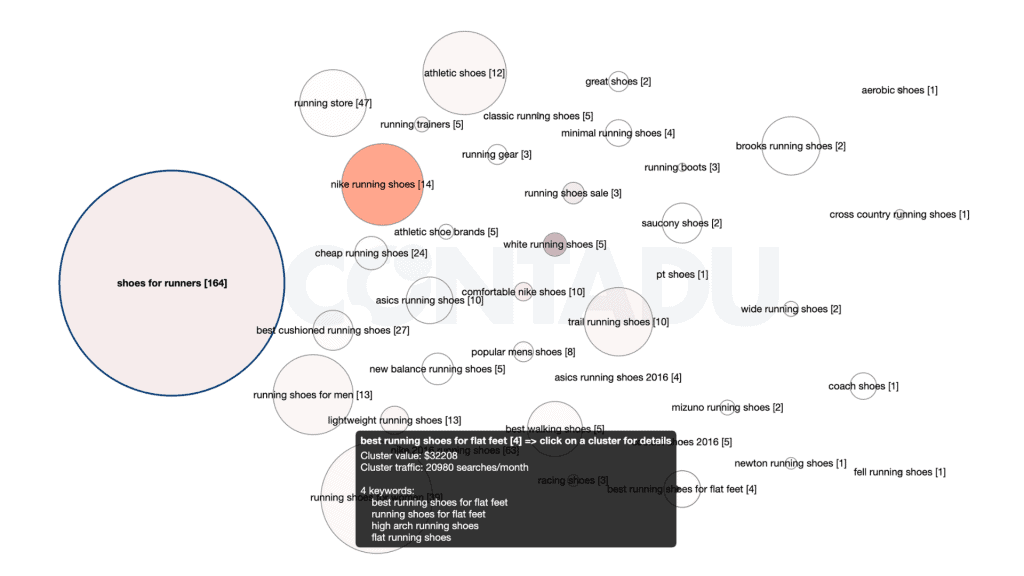

Identify the most relevant and popular topics in your niche and how your competitors are covering them. You can use this information to create content that fills the content gaps, outperforms your competitors, and attracts your target audience.

- Understand the best competing content

- Follow user intent coverage

- Find content with high sales value

- Audit your own strategy

AI optimised content plan

The content plan in CONTADU helps you improve the quality, relevance, and performance of your content in the long term. You get easy instructions on what content needs to be written or enhanced.

- Easy to follow list of proposed subjects

- New and existing content suggestions

- Easy sharing with copywriters

User intents mapped and clustered

User intent mapping helps you optimize your content for SEO, conversion, and retention. By creating content that meets the user’s intent, you can rank higher on search engines, attract more qualified leads, and build trust and loyalty with your audience.

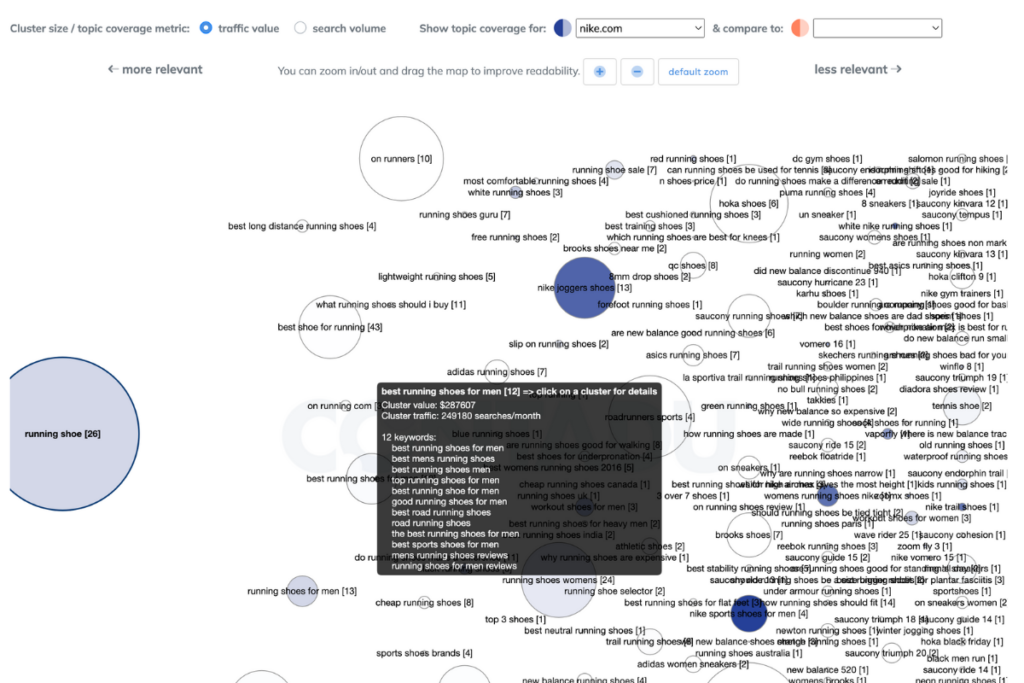

- Content “Topical landscape” visualisation

- Semantic map of user intents

- Traffic and value (CPC) clusters

- Understanding content gaps
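
As a rough illustration of the content-gap idea above, the sketch below compares page counts per topic cluster for your site and one competitor. It is not CONTADU's actual method: the function name `content_gaps`, the cluster names, and the page counts are all hypothetical, chosen only to show the comparison.

```python
def content_gaps(own: dict[str, int], competitor: dict[str, int]) -> list[str]:
    """Return clusters where the competitor has pages and we have none.

    Hypothetical helper: both inputs map a cluster name to a page count.
    Gaps are ordered by the competitor's page count, largest first.
    """
    gaps = [
        cluster
        for cluster, pages in competitor.items()
        if pages > 0 and own.get(cluster, 0) == 0
    ]
    return sorted(gaps, key=lambda cluster: competitor[cluster], reverse=True)


# Made-up example data, for illustration only.
own_pages = {"keyword research": 4, "link building": 0}
competitor_pages = {"keyword research": 6, "link building": 3, "local seo": 5}

gaps = content_gaps(own_pages, competitor_pages)
print(gaps)
```

Ordering the gaps by the competitor's coverage is just one possible way to prioritize; traffic or CPC value per cluster could serve as the sort key instead.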

Semantic & intent approach

Monitor, measure, and improve your content performance and quality in specific clusters. You can use multiple integrated modules, such as backlink monitoring, GSC integration, work statuses, and a quality checklist, to optimize your content for SEO and user satisfaction.

- Manage content in detailed view

- Monitor performance based on user intent

- Optimise site architecture

- Plan content distribution and promotion

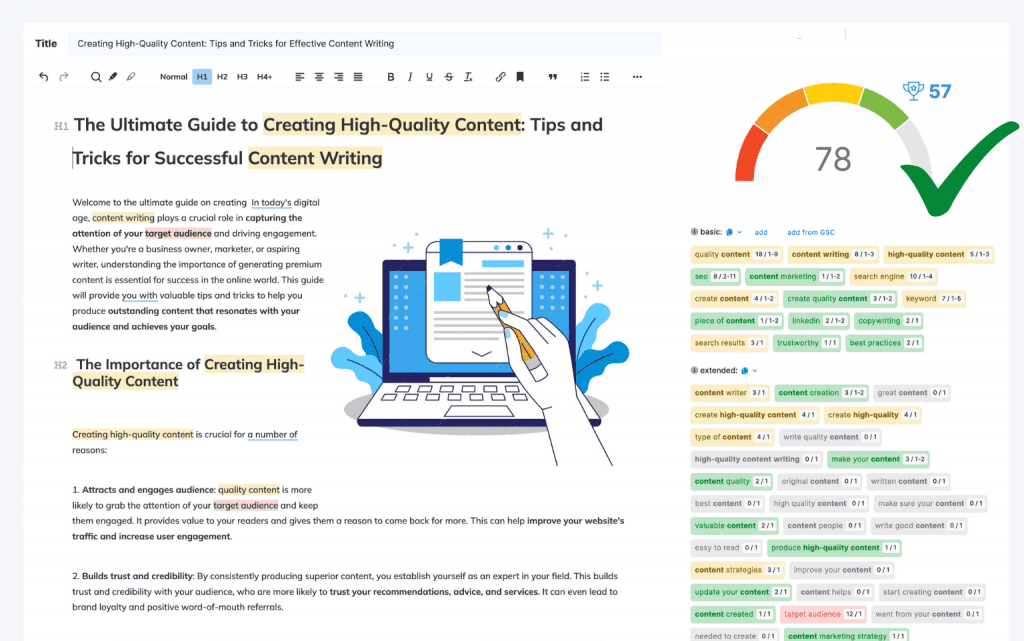

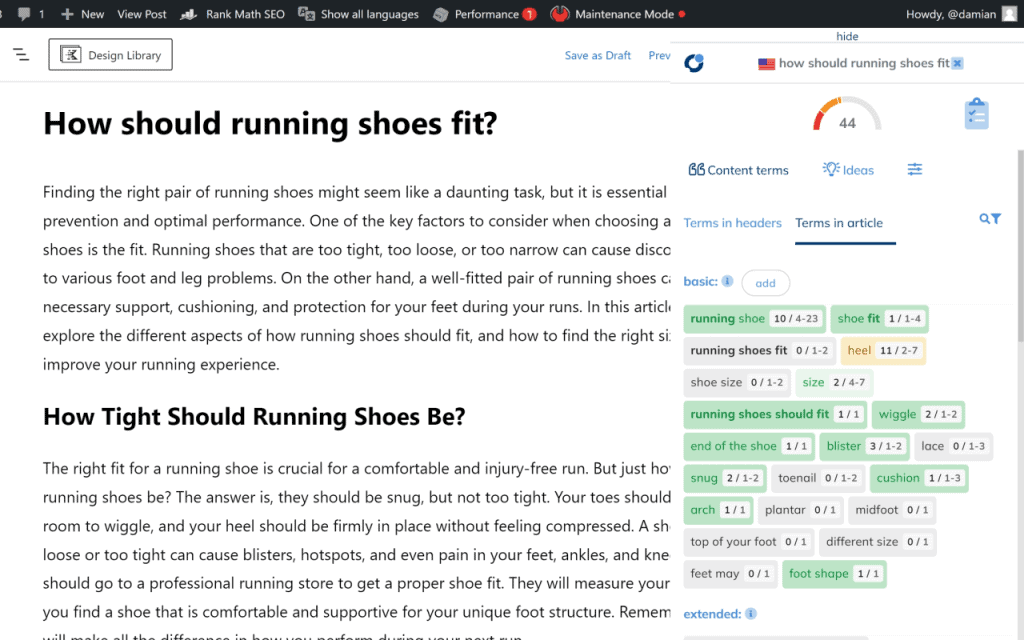

Content Editor - Semantic Expert At Your Service

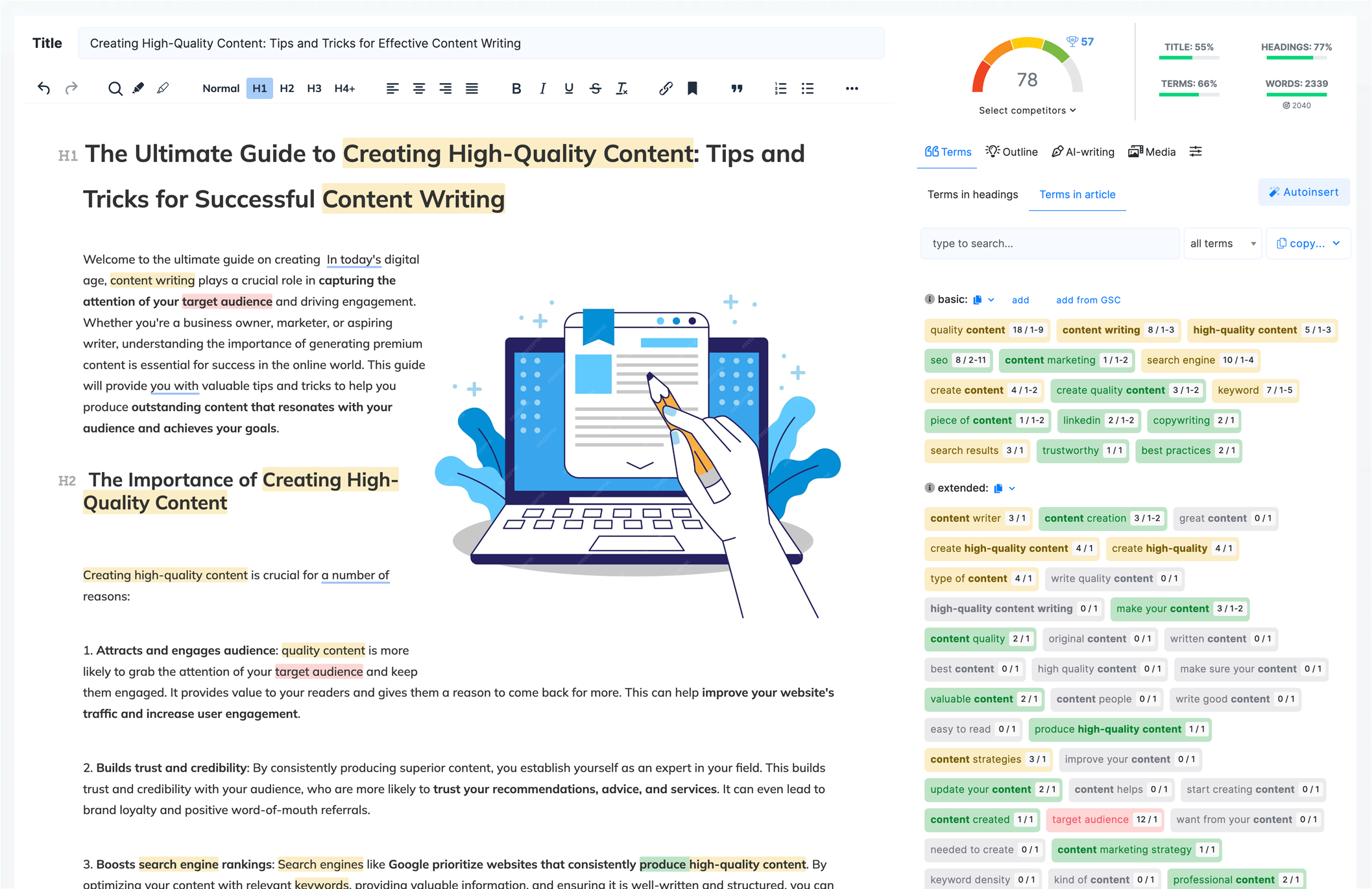

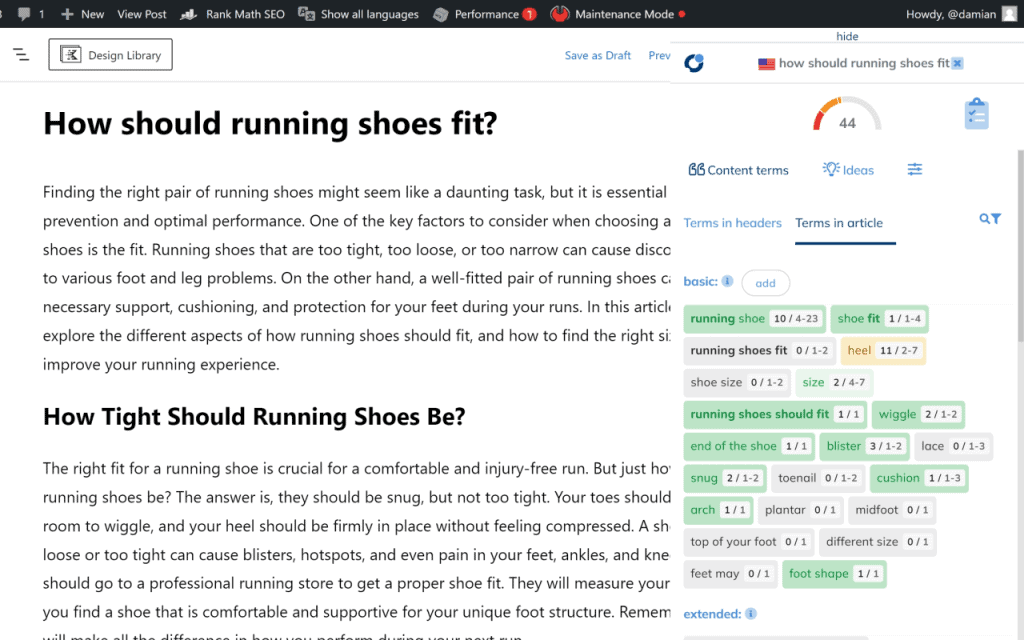

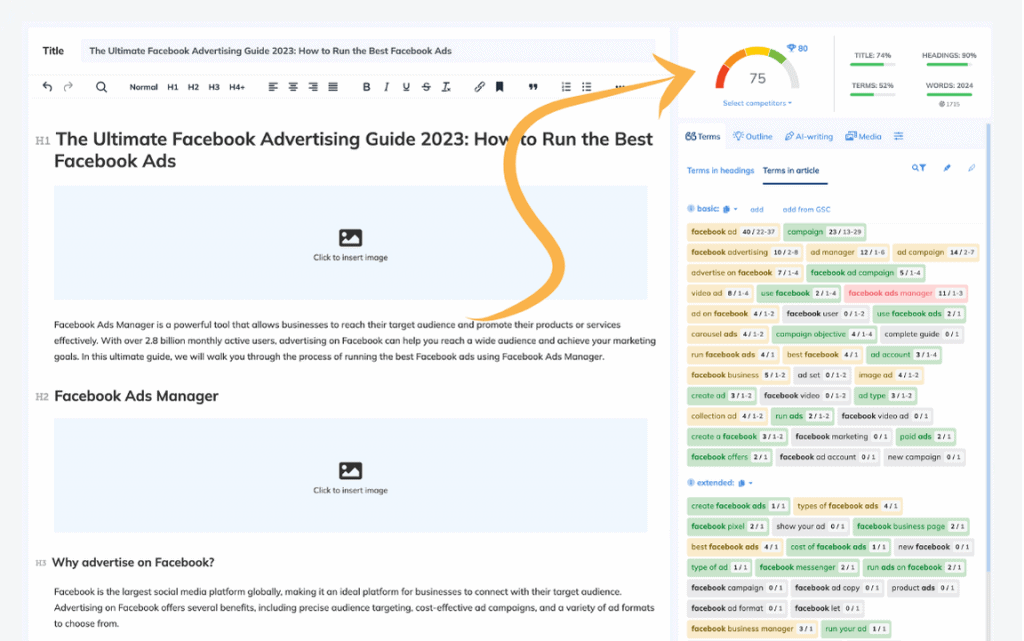

NLP-based recommendations for content

Content that ranks in Google

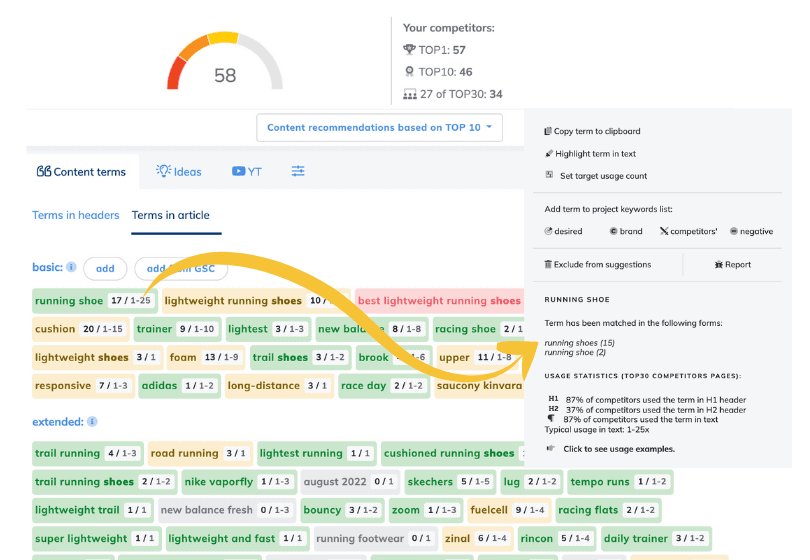

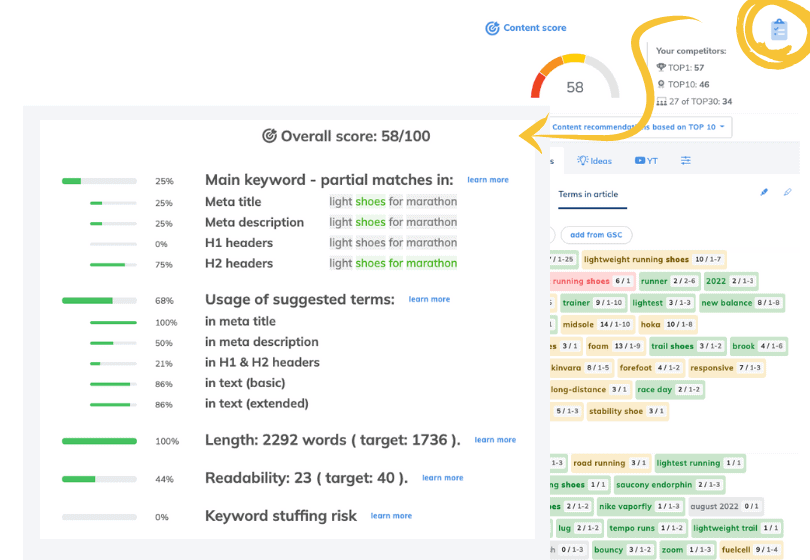

Use NLP terms: suggested words and phrases drawn from your top-ranking competitors in Google. Follow simple NLP-based term suggestions to cover the topic thoroughly with related terms.

- Get topic-relevant phrases

- Boost your content with NLP terms

- Cover topical knowledge gaps
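
To make the idea of term coverage concrete, here is a minimal sketch that checks which suggested terms already appear in a draft. It only assumes simple case-insensitive phrase matching; it does not reflect how CONTADU's NLP models work, and the function name `term_coverage` and the sample terms are hypothetical.

```python
import re


def term_coverage(draft: str, suggested_terms: list[str]) -> dict:
    """Report which suggested terms appear in the draft.

    Matching is case-insensitive and uses word boundaries, so a short
    term does not match inside a longer, unrelated word.
    """
    text = draft.lower()
    covered, missing = [], []
    for term in suggested_terms:
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        if re.search(pattern, text):
            covered.append(term)
        else:
            missing.append(term)
    share = len(covered) / len(suggested_terms) if suggested_terms else 0.0
    return {"covered": covered, "missing": missing, "coverage": share}


# Made-up draft and term list, for illustration only.
report = term_coverage(
    "Topical authority grows when internal linking connects related articles.",
    ["topical authority", "internal linking", "search intent"],
)
print(report["missing"])
```

A real editor would also need to handle inflected forms and synonyms, which plain phrase matching like this does not.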

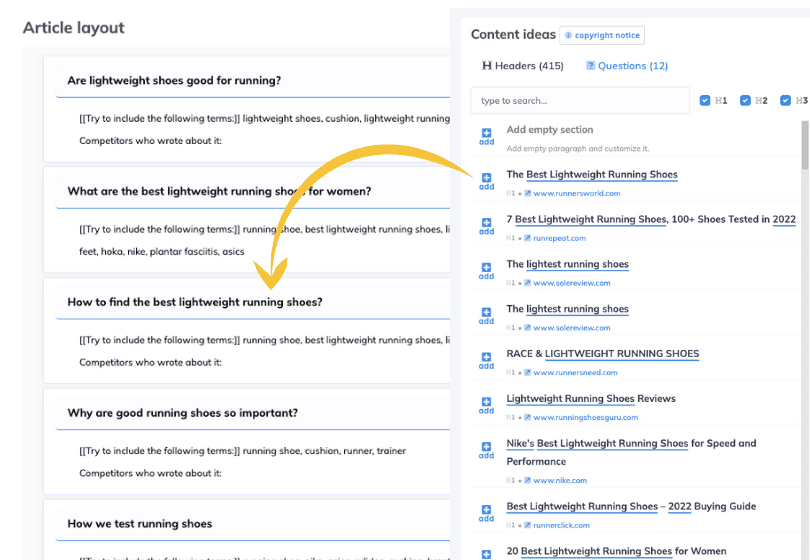

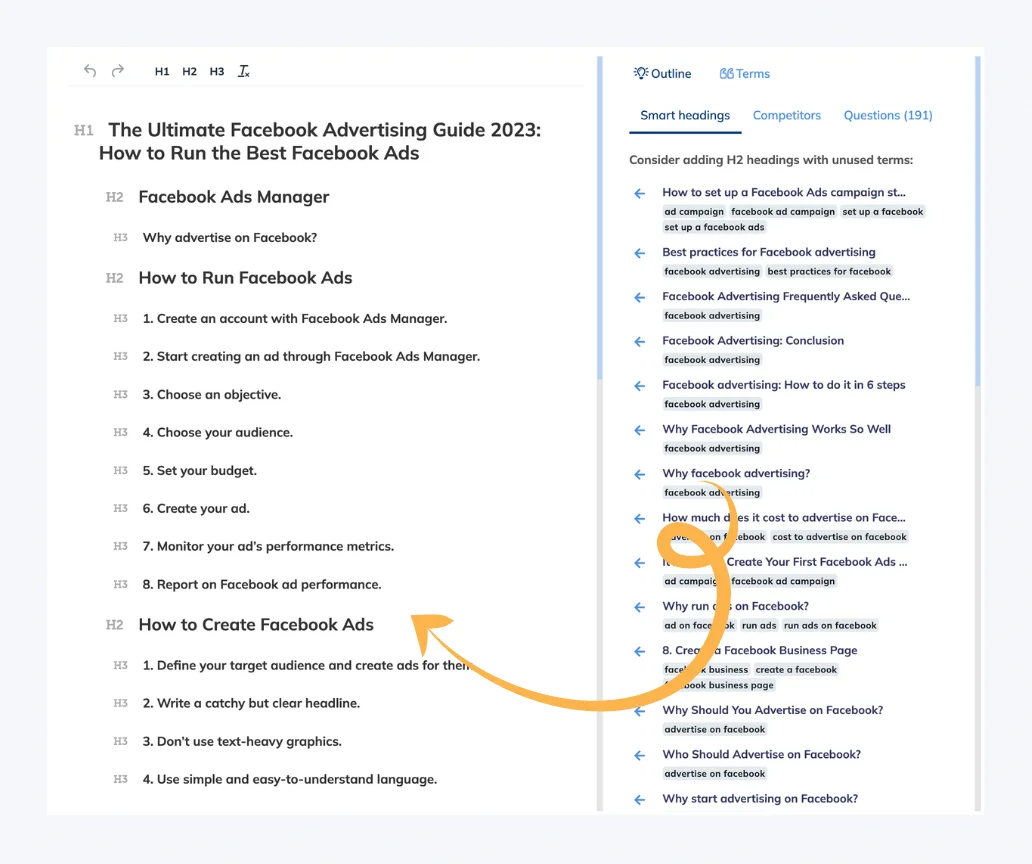

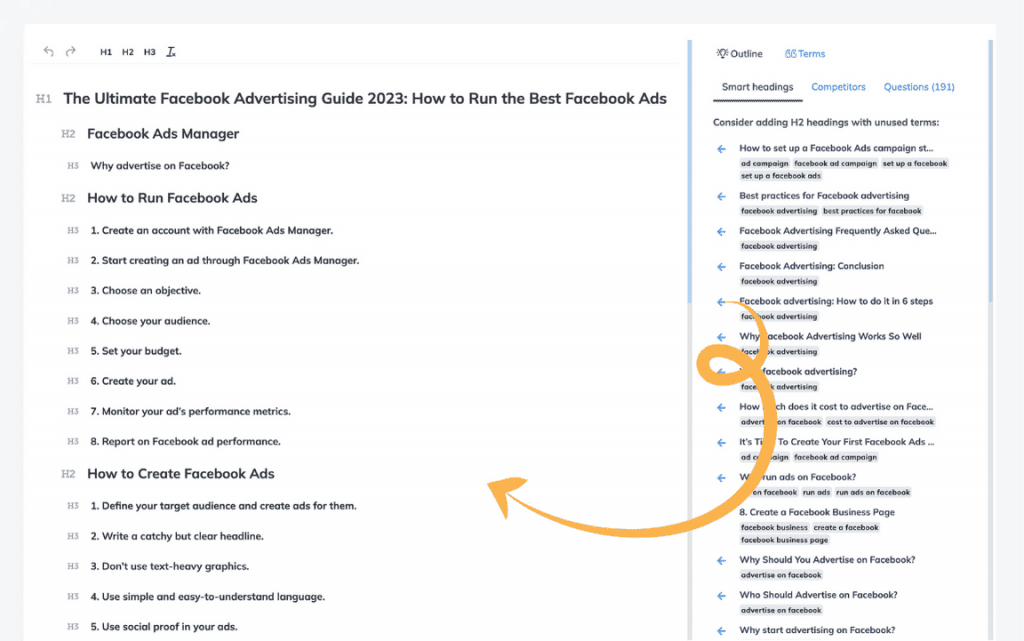

Article structure in minutes

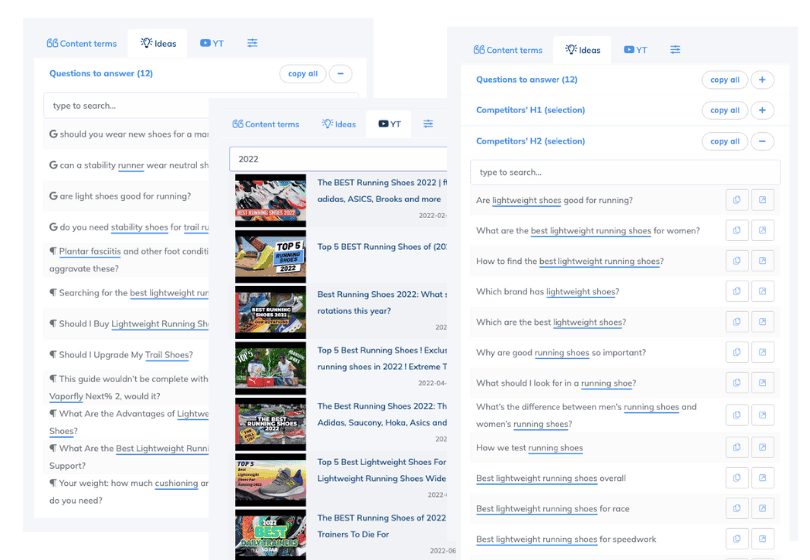

Increase your content creation productivity with the built-in DRAFT generator. Add headlines with ideas based on competitors’ best headlines and relevant questions from Google.

- Quickly create a content outline

- No time spent on research

- Competitor insights

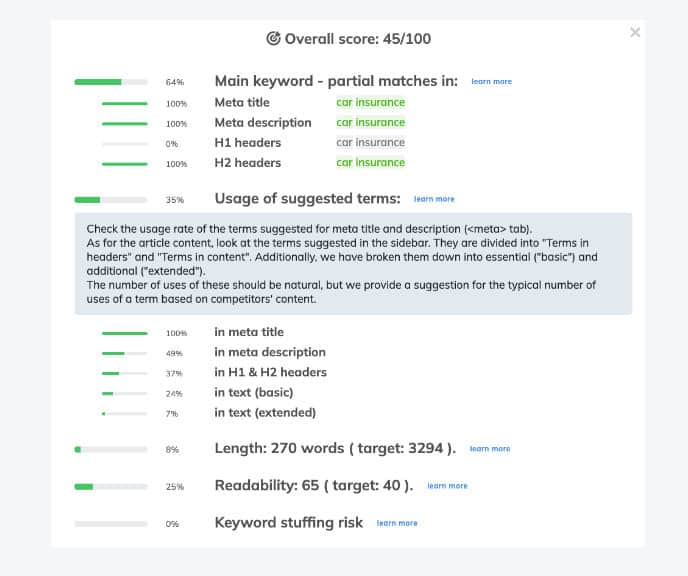

Optimize content to rank high

Monitor key text parameters and overall content quality with Content Score. It’s based on key SEO factors and works very intuitively: the more points you achieve, the better optimized the content is. Pretty simple.

- Score points for good content

- Easy-to-follow checklist

- Compare with any URLs from TOP30

Discover user intent

When you’re stuck on an article and don’t know what to write next, look at the relevant ideas section. The ideas there can serve as excellent inspiration for the next section of your article or even for your next publication.

- Find questions related to the topic

- Explore competitors' headers

- Get ideas from YouTube

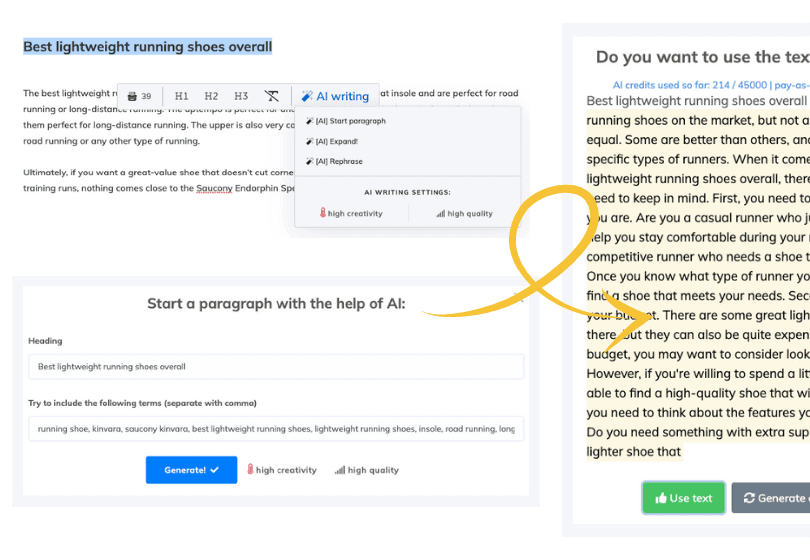

Save time when needed

Use the help of an AI writer to write paragraphs. With the most well-known and advanced commercial GPT language models, you can be confident of creating good content that is relevant to your topic.

- Save time on writing

- No more writer’s block

- Use built-in AI templates

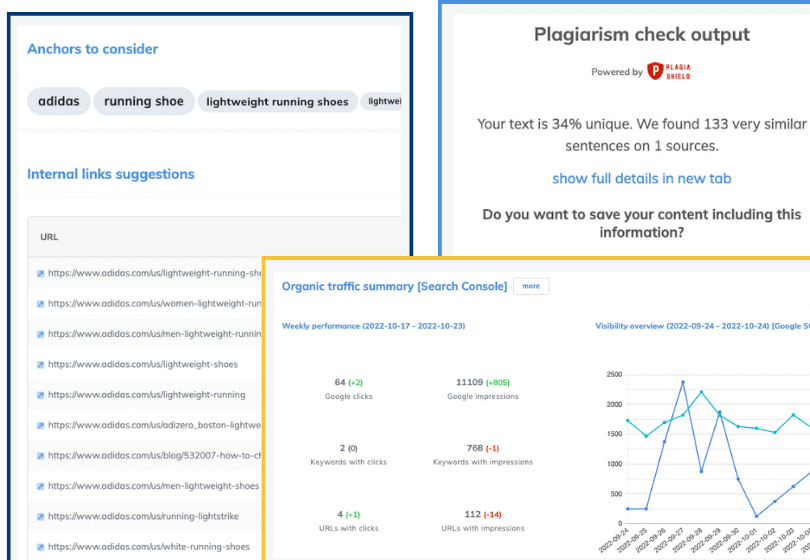

Additional features…

Use the extra toolset to avoid submitting plagiarized work, get suggestions on how to build your link structure, and easily monitor up-to-date information about your site’s organic traffic. And that’s not all… at all.

- Plagiarism checker

- Internal linking

- GSC & WP integration

- Chrome extension

Plan & Manage Content In An Easy Way

Cover the whole process of building topical authority

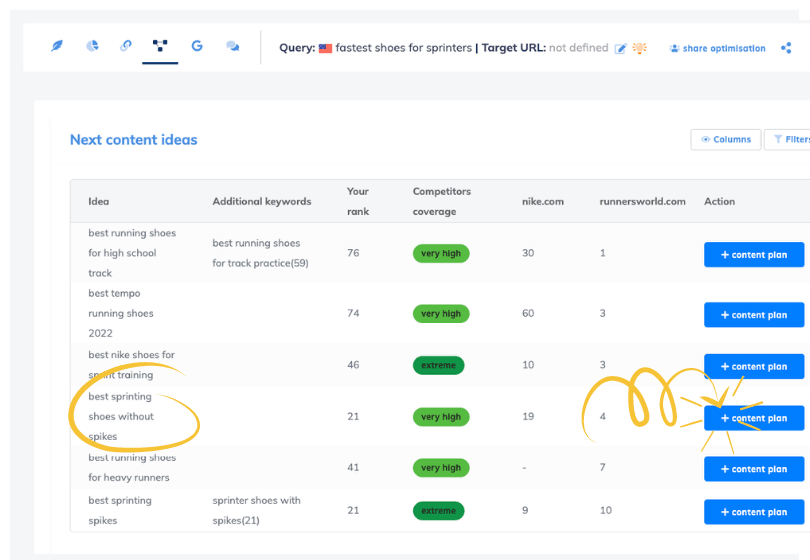

Relevant “next content” ideas

Explore ideas related to the topic for your next articles. Save hours on brainstorming and planning while creating more valuable content for your audience.

- Get ideas based on your own and competitors’ content.

- Relevant content, covering user needs

- Save time, plan months ahead

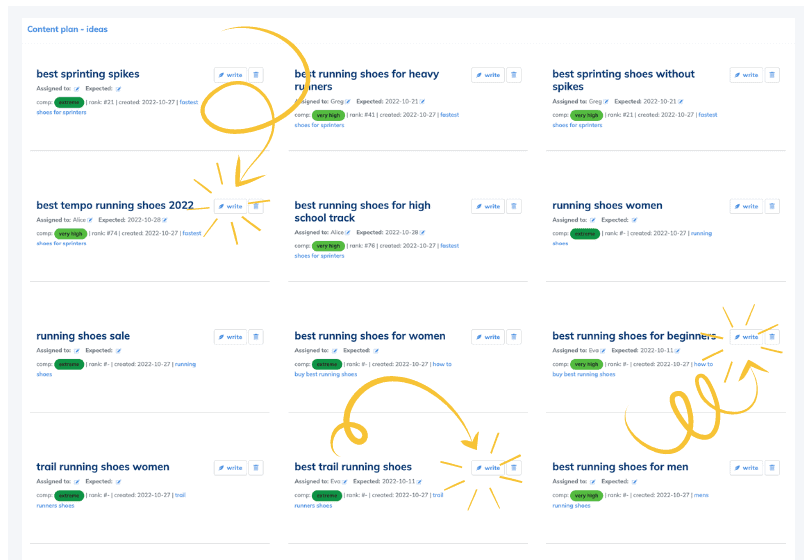

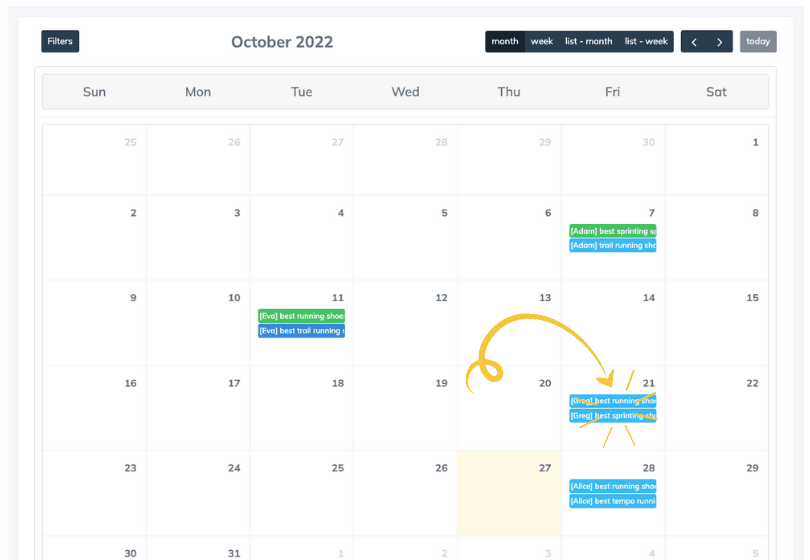

Long-term publication plan

Enrich your communication and plan more high-demand content. Topical authority grows as you add more relevant and informative content: the more related content you publish, the higher your topical authority and website quality score.

- Enrich and complete the topic

- Create valuable content

- Build topical authority

Manage and execute with ease

A clear planner with task lists and statuses helps both the copywriter and the supervising team track and see the progress in collaborative writing work.

- A clear list of content to optimize or produce

- Responsibility and deadlines

- Statuses, progress, and tagging

Publish and integrate with ease

With the Chrome extension you can edit content directly in Shopify, Google Docs and WordPress. For WordPress you can also use the native WordPress API integration to import and export specific posts and pages. If you like to work with ChatGPT-like interfaces, CONTADU can export recommendations in the form of AI prompts.

- Chrome extension (WordPress, Google Docs & Shopify)

- WordPress API integration

- Export (text, HTML, ChatGPT prompts)

01

Understand user intent and the type of content needed

Start by planning the right page type for a given user query. Some queries call for a blog article, others for a category description.

02

Choose direct competitors with a similar type of content

Select the right competitors with a high content score to increase the quality of semantic recommendations.

03

Plan document structure based on useful information

04

Optimise and enrich content with semantic SEO

Create Content Effortlessly With The Latest GPT Engines

Content Designer & AI templates

Do you want to accelerate your work, also on a large scale?

Thanks to Content Designer and easy-to-use templates, you can quickly create high-quality content using AI. Combining term recommendations, structure optimization, and advanced AI templates, you are able to quickly achieve a high content score. The latest GPT engines are at your disposal, generating hundreds of thousands of words per day.

Master SEO Optimisation Process

High-ranking content

Does it take a lot of time and effort to rank your content?

CONTADU will help you research articles related to your niche and get the content you need with easy-to-follow recommendations.

Analyze competitors’ top-rated content, YouTube content or preferred Google SERPs, and answer the problems your readers are facing.

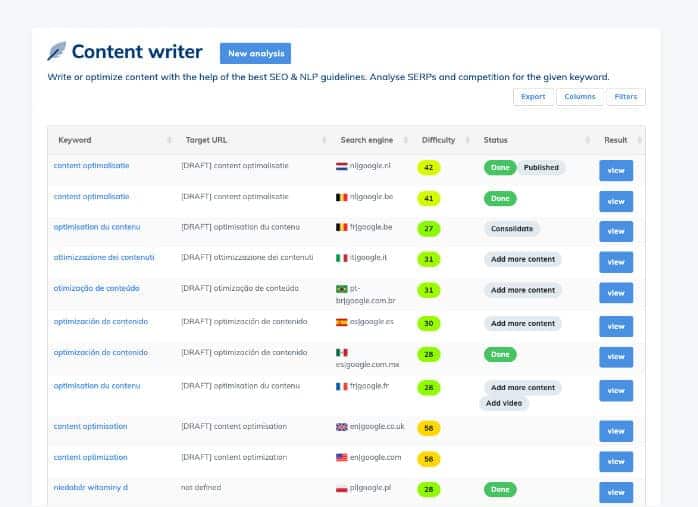

Easy Document Management

Plan and tag all your work

The content repository helps you set priorities based on market trends, tag and group analyses, and share them with external copywriters.

Mark content as done and export analyses with custom columns for easy reporting. You can use filters too.

How NEURON Helps You To Get More Traffic?

Easy content planning and optimization process

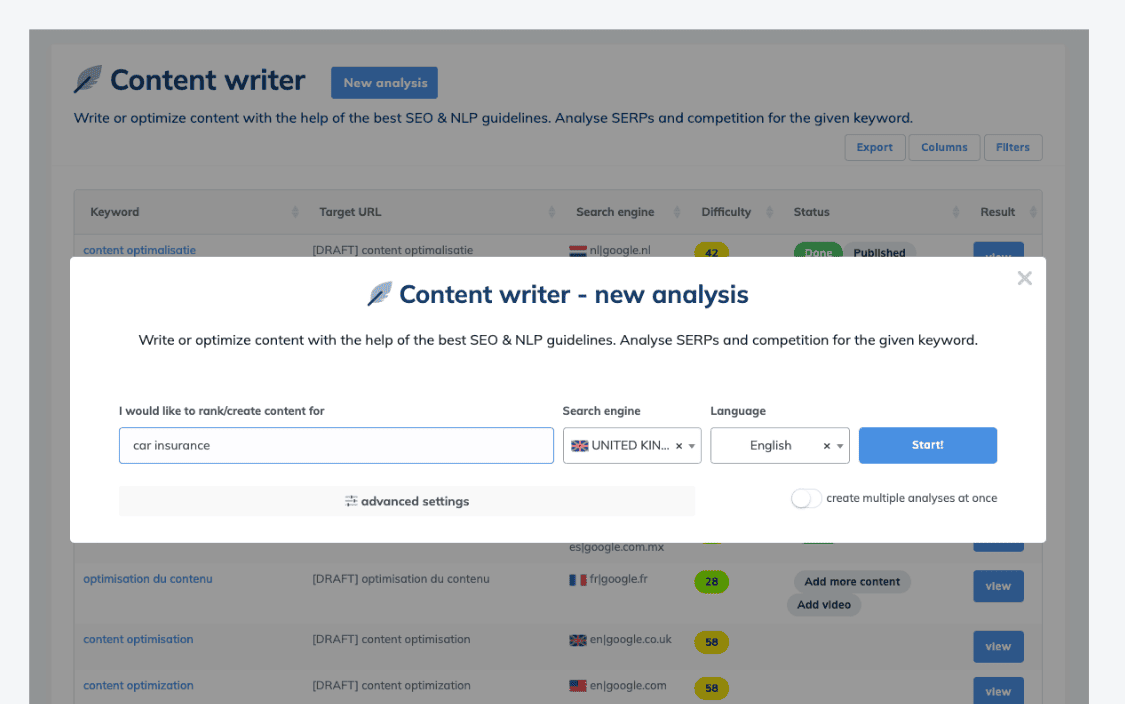

Start with one keyword

you want to rank for in Google

Competitors' analysis

is ready in a few seconds

Create a document draft

including popular questions & topics

Use recommendations

& GPT AI to write the best content

Contadu Pricing Plans

Invest In Content Intelligence

In every plan: Generative AI templates, Advanced Draft mode, Unlimited sharing & team members, WP & Chrome extension, etc.

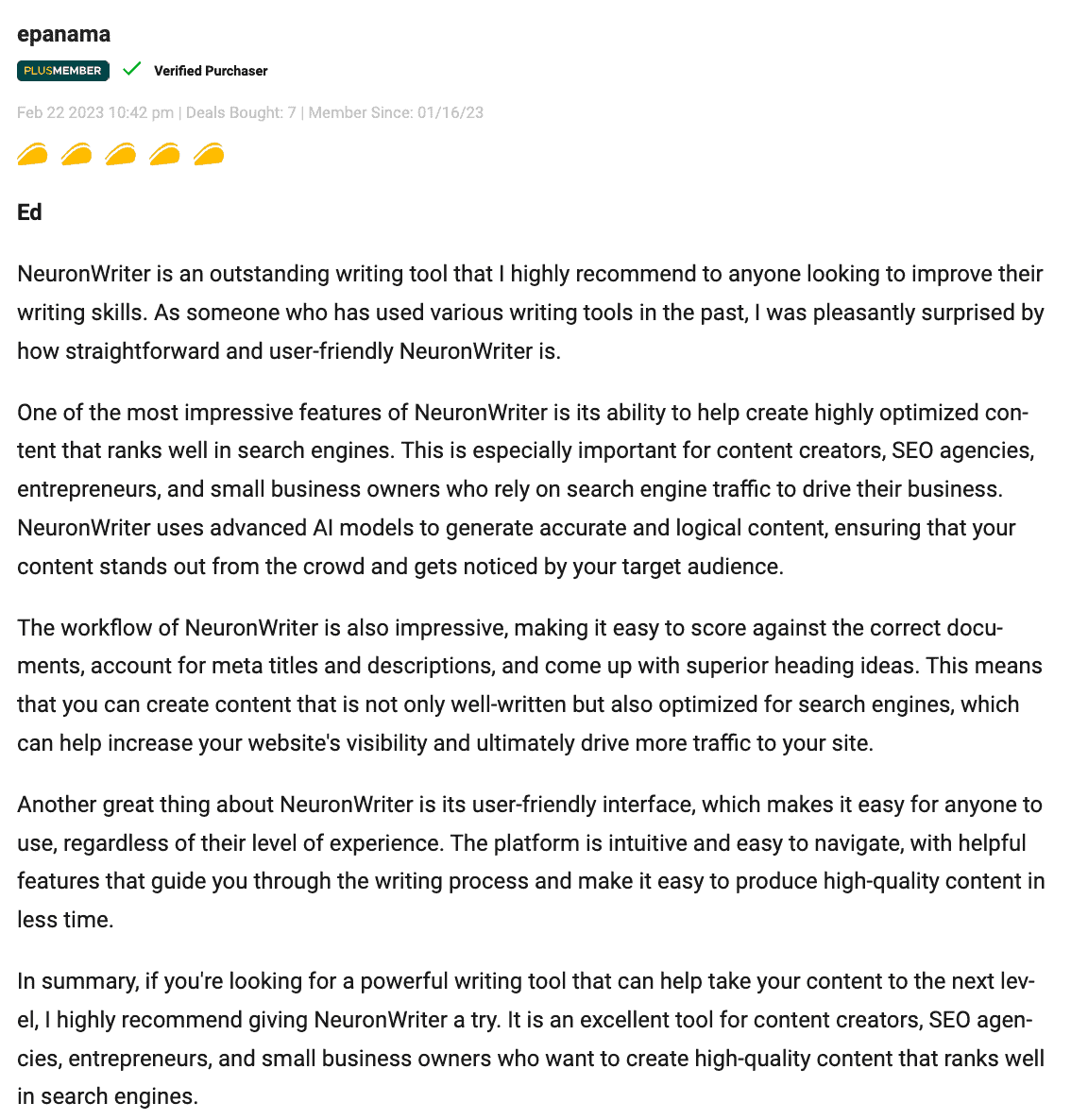

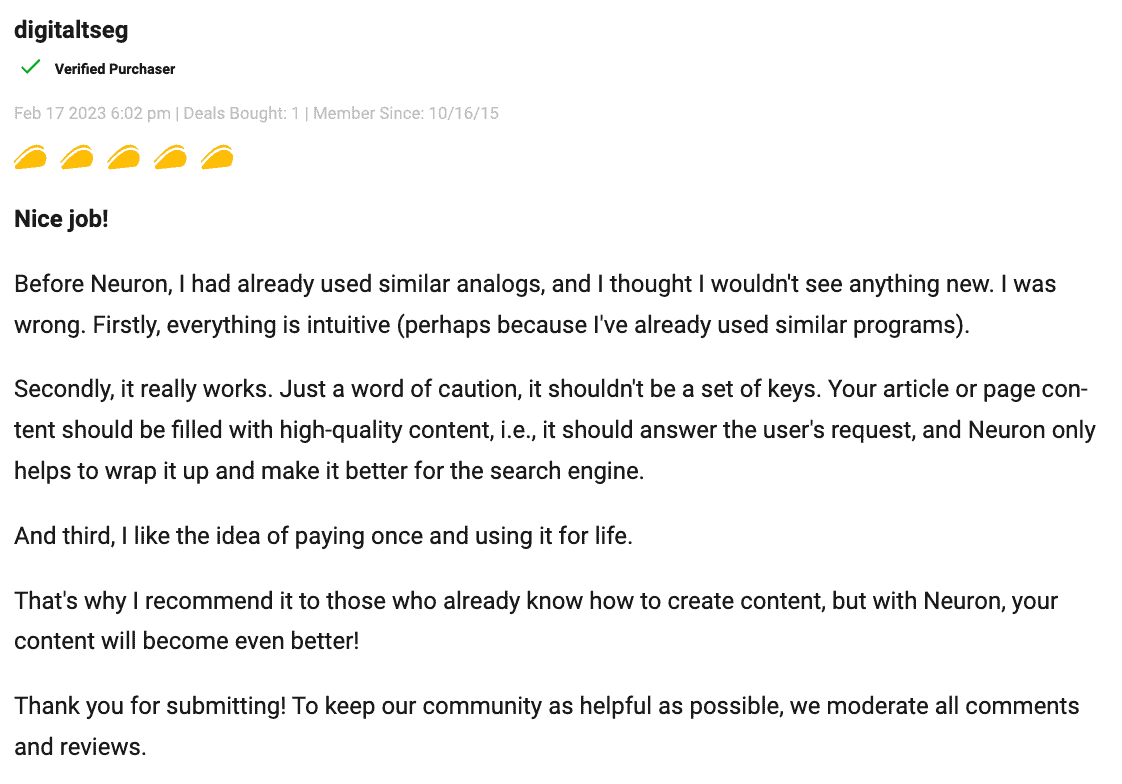

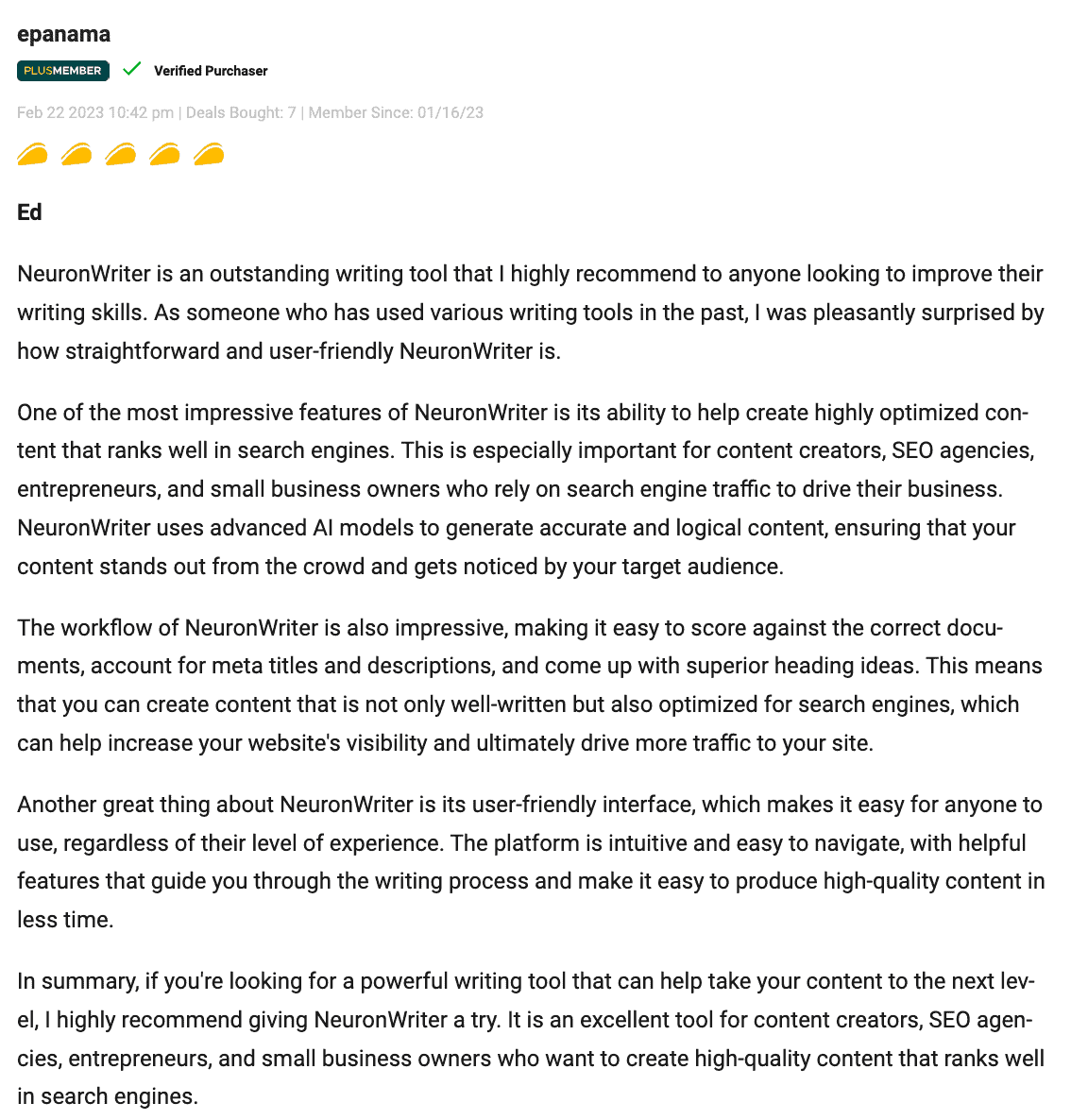

User Feedback

Happy users make us proud 😉